This week, we will be using the Pac-Man interface developed by John DeNero and Dan Klein at UC Berkeley.

As in the previous lab, use Teammaker to form your team. You can log in to that site to indicate your partner preference. Once you and your partner have specified each other, a GitHub repository will be created for your team.

In this lab, we will again be exploring sequential decision problems that can be modeled as Markov Decision Processes (MDPs). However, in a reinforcement learning setting, much of the MDP is initially unknown to the agent. You will be implementing Q-learning agents that must explore their environments and try to discover the underlying model.

The starting point code includes many files for the GridWorld and Pac-Man interfaces. Most of these files you can ignore. The only files you will modify are:

For a refresher on the GridWorld interface, see our last lab.

Your primary task is to write a Q-learning agent that learns a policy for how to behave in the GridWorld and Pac-Man domains. This policy will be created from trial and error interactions with the environment through its update method. A stub of a Q-learner is specified in the file qlearningAgents.py in the class BasicQLearningAgent.

This Q-learning agent will maintain a table of its estimated value for all possible state-action combinations that it encounters in the environment. The agent knows the possible actions it can take, but does not know the possible states it will encounter. Any time the agent visits a new state for the first time, you'll need to add this state to the table with estimated values of 0 for all possible actions.

Start by implementing following methods for BasicQLearningAgent:

The update rule for Q-learning is:

Q(s,a) += lrate * (reward + discount * max[Q(s',a')] - Q(s,a))

Where lrate is the learning rate (a constant in the range [0,1]), discount is the discount factor (a constant in the range [0,1]), and s' is the next state.

Note: For explorationPolicy and greedyPolicy, you should break ties randomly for better behavior. In a particular state, actions that your agent hasn't seen before still have a Q-value, specifically a Q-value of zero. If all of the actions that your agent has seen before have a negative Q-value, then an unseen action may be optimal.

Also Note: The member variables self.learning_rate, self.discount, and self.explore_prob are initialized by the parent class.

Important: Make sure that you only access Q values by calling actionValue in your methods. This abstraction will be useful later when we will override actionValue in approximate Q-learning to use features of state-action pairs rather than state-action pairs directly.

With the Q-learning update in place, you can watch your Q-learner learn under manual control, using the keyboard:

python2 gridworld.py -k 5 -m

The -k will control the number of episodes your agent gets to learn. Watch how the agent learns about the state it was just in, not the one it moves to, and leaves learning in its wake.

Or if you'd rather watch the learning, do the following command where the -s controls the speed of the display (lower numbers reduce the speed of animation):

python2 gridworld.py -k 5 -s 0.2

Complete your Q-learning agent by implementing epsilon-greedy action selection in explorationPolicy, meaning it chooses random actions epsilon of the time, and follows its current best Q-values otherwise. Test your agent by doing:

python2 gridworld.py -k 100 -q

Your final Q-values should resemble those of the value iteration agent, especially along well-traveled paths. However, your average returns will be lower because of the random actions and the initial learning phase.

Once your Q-learning implementation is working on grid worlds, you are ready to move on to Pac-Man.

First, familiarize yourself with the Pac-Man interface. Try playing a game of Pac-Man by typing the following at the command line:

python2 pacman.py

In this case you are the agent that is controlling Pac-Man. Use the arrow keys to move Pac-Man around in his environment. The smaller white dots represent food. The goal of the game is to eat all of the food while avoiding the ghosts that are trying to thwart Pac-Man. If Pac-Man runs into a ghost, he dies. However, the larger white dots are special capsules that when eaten by Pac-Man make the ghosts safe to contact. The ghosts will only be safe for a short time, while they are colored white, but they eventually become dangerous again.

In your lab06 directory you'll see that you have a sub-directory called layouts. When you invoke the pacman game, by default it chooses the mediumClassic layout. However you can use command-line arguments to choose other layouts. For example:

python2 pacman.py -l smallGrid

Try other other layouts in the directory. Notice that these layouts are simply text files that follow a convention for designating the locations of walls, food, capsules, ghosts and pacman.

Note that pacman.py supports a number of options that can each be expressed in a long way (e.g., --layout) or a short way (e.g., -l). You can see the list of all options and their default values via:

python2 pacman.py -h

The Pac-Man agent can execute the following five actions:

What the Pac-Man agent can perceive is based on the methods of the GameState class which is defined in the file pacman.py. Open up this file and look through the options.

It is important to recognize that the game has a number of different agents (Pac-Man and the ghosts). Each agent in the game has a unique index; Pac-Man is always index 0, with ghosts starting at index 1.

Pac-Man can perceive:

In addition, Pac-Man can also determine given the action he chooses what the next state of the environment will be, by using the method generatePacmanSuccessor.

The file simpleAgents.py contains examples of three basic agents that control PacMan:

As you can see in this file, at a minimum, a Pac-Man agent must have a getAction method that takes in a state and returns a valid action.

To test these agents do:

python2 pacman.py -l openSearch -p RandomAgent python2 pacman.py -l openSearch -p ReflexAgent python2 pacman.py -l openSearch -p StateAgent

You will be creating a Pac-Man agent that chooses actions based on Q-learning's assessment of which action is likely to maximize long-term reward.

Time to use Q-learning to play some Pac-Man! Pac-Man will play games in two phases. In the first phase, training, Pac-Man will begin to learn about the values of positions and actions. Because it takes a very long time to learn accurate Q-values even for tiny grids, Pac-Man's training games run in quiet mode by default, with no GUI (or console) display. Once Pac-Man's training is complete, he will enter testing mode. When testing, Pac-Man's self.explore_prob and self.learning_rate will be set to 0.0, effectively stopping Q-learning and disabling exploration, in order to allow Pac-Man to exploit his learned policy. Test games are shown in the GUI by default. Without any code changes you should be able to run Q-learning Pac-Man for very tiny grids as follows:

python2 pacman.py -p PacmanQAgent -x 2000 -n 2010 -l smallGrid

Note that PacmanQAgent is already defined for you in terms of the QLearningAgent you've already written. PacmanQAgent is only different in that it has default learning parameters that are more effective for the Pac-Man problem (epsilon=0.05, alpha=0.2, gamma=0.8).

Your agent should usually win at least 70% of the games in the last 10 runs where the GUI is displayed.

Hint: If your QLearningAgent works for gridworld.py but does not seem to be learning a good policy for Pac-Man on smallGrid, it may be because your getAction and/or getPolicy methods do not in some cases properly consider unseen actions. In particular, because unseen actions have by definition a Q-value of zero, if all of the actions that have been seen have negative Q-values, an unseen action may be optimal.

Note: If you want to experiment with learning parameters, you can use the option -a, for example -a epsilon=0.1,alpha=0.3,gamma=0.7. These values will then be accessible as self.explore_prob, self.discount and self.learning_rate inside the agent.

While a total of 2010 games will be played, the first 2000 games will not be displayed because of the option -x 2000, which designates the first 2000 games for training (no output). Thus, you will only see Pac-Man play the last 10 of these games. The number of training games is also passed to your agent as the option numTraining.

During training, you will see output every 100 games with statistics about how Pac-Man is faring. Epsilon is positive during training, so Pac-Man will play poorly even after having learned a good policy: this is because he occasionally makes a random exploratory move into a ghost.

As a benchmark, it should take about 1,000 games before Pac-Man's rewards for a 100 episode segment becomes positive, reflecting that he's started winning more than losing. By the end of training, it should remain positive and be fairly high (between 100 and 350).

If you want to watch 10 training games just to see what's going on, use the command:

python2 pacman.py -p PacmanQAgent -n 10 -l smallGrid -a numTraining=10

Once Pac-Man is done training on 2000 games, he should win very reliably in test games, since now he is exploiting his learned policy.

However, you'll find that training the same agent on the seemingly simple mediumGrid may not work well. In our implementation, Pac-Man's average training rewards remain negative throughout training. At test time, he plays badly, probably losing all of his test games. Training will also take a long time, despite its ineffectiveness.

Pac-Man fails to win on larger layouts because each board configuration is a separate state with separate Q-values. He has no way to generalize that running into a ghost is bad for all positions. Obviously, this approach will not scale. In the next section we will see how to use an approximate version of Q-learning to improve performance on larger layouts.

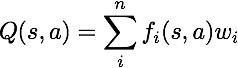

Next you will implement an approximate Q-learning agent that learns weights for features of states, where many states might share the same features.

Approximate Q-learning assumes the existence of a feature function f(s,a) over state and action pairs, which yields a vector f1(s,a) .. fi(s,a) .. fn(s,a) of feature values. We have provided some feature functions for you in featureExtractors.py.

The constructor of the ApproximateQAgent class in the file qlearningAgents.py includes a variable self.featureExtractor that is set from the command-line arguments. To access the features for a given state and action do:

self.featureExtractor.getFeatures(state,action)This will return a feature vector which is an instance of a util.Counter object. You can see the implementation of the Counter class in the util.py file. A Counter is like a dictionary that maps keys to values, but with some additional functionality. Any omitted key automatically has a value of zero. Also you can multiply, add, and subtract two Counter objects. A feature vector will map feature names such as 'closest-food' to a number representing the distance to the closest food pellet from Pac-Man's current position.

In the file qlearningAgents.py, complete the implementation of the ApproximateQAgent class as follows:

where each weight wi is associated with a particular feature fi(s,a). Implement this as the dot product of the weights and the features.

delta = reward + discount*max[Q(s',a')] - Q(s,a) wi += lrate * delta * fi(s,a)

Note that the delta term is the same as in normal Q-learning.

The ApproximateQAgent has an IdentityExtractor that assigns a single feature to every (state,action) pair. With this feature extractor, your approximate Q-learning agent should work identically to PacmanQAgent. You can test this with the following command:

python2 pacman.py -p ApproximateQAgent -x 2000 -n 2010 -l smallGrid -a extractor=IdentityExtractor

Important: ApproximateQAgent is a subclass of QLearningAgent, and it therefore shares several methods. Make sure that your methods in QLearningAgent call the method actionValue instead of accessing Q-values directly, so that when you override the method actionValue in your approximate agent, the new approximate Q-values are used to compute actions.

Once you're confident that your approximate learner works correctly with the identity features, you can try it with the custom feature extractor called SimpleExtractor, which depends on just four features:

Note: In order to keep weights in a reasonable range, all of these features are divided by 10.0.

Your approximate Q-learning agent with the SimpleExtractor should learn to win with ease on the mediumGrid:

python2 pacman.py -p ApproximateQAgent -a extractor=SimpleExtractor -x 50 -n 60 -l mediumGrid

Even much larger layouts, such as the mediumClassic should be no problem for your ApproximateQAgent (warning: this may take a few minutes to train).

python2 pacman.py -p ApproximateQAgent -a extractor=SimpleExtractor -x 50 -n 60 -l mediumClassic

If you have no errors, your approximate Q-learning agent should win almost every time with these simple features, even with only 50 training games.

When watching your trained approximate Q-learning agent using the SimpleExtractor, you probably noticed that he actively avoids ghosts, even when they are safe to eat. This is because the given feature extractor doesn't distinguish between safe and dangerous ghosts, so ghosts always look dangerous. If you try your approximate Q-learning agent on some of the hardest layouts like contestClassic and trickyClassic, the fact that Pac-Man is not seeking out and using the capsules and not eating safe ghosts hurts his performance.

In the file featureExtractors.py implement the BetterExtractor class to improve on SimpleExtractor. Remember that you can look in the file pacman.py at the methods in the GameState class, for information you can use in your extractor. You might want to consider incorporating:

Note: Each ghost state maintains a variable called scaredTimer. This timer is 0 when a ghost is dangerous to Pac-Man and greater than 0 when a ghost is safe for Pac-Man to eat.

Test your better extractor on the hardest Pac-Man layouts and see if it fares better than the simple one:

python2 pacman.py -p ApproximateQAgent -a extractor=BetterExtractor -x 50 -n 60 -l contestClassic

Be sure to write a clear comment with your BetterExtractor explaining what features it is focusing on.

This is an optional extension that you may wish to try. The idea is to use a more sophisticated exploration strategy then the epsilon-greedy approach. Here, we will use the familiar UCB formula that was an essential piece of selection in MCTS and apply it to a simulated crawler robot.

You will need to implement the following methods for UCBQLearningAgent:

Recall that the UCB formula is:

node_value + C * sqrt( ln(parent_visits)/node_visits )

For our purposes in this lab:

Here is a sketch of how to implement the exploration policy:

lookup values and visits for possible actions in current state

if some actions are untried

randomly pick one of those and return it

handle negative values by finding min value

shift all values by that min value

compute the UCB weights for each action

convert weights to probabilities

use numpy.random.choice (as we did in BeamSearch) to

select action based on probabilities and return it

Once your implememtation is complete, try running it:

python2 gridworld.py -k 100 -a ucb

With no additional code, you should now be able to run UCB Q-learning on a simulated crawler robot:

python2 crawler.py

If this doesn't work, you've probably written some code too specific to the GridWorld problem and you should make it more general to all MDPs.

The goal is for the crawler to discover how to move itself forwards across the simulation window. When it manages to reach the right side, it will wrap around and continue moving. At first progress will be very slow because it is exploring all of the possible actions. Gradually it will start to move a little faster. For instance, my solution took approximately 2400 steps to wrap around the first time. After lots of trial and error, the crawler should begin to move faster. My solution wrapped around the second time after a total of 3900 steps (so it only took 3900-2400=1500 steps the second time).

Be sure to use git to add, commit, and push the files that you modified.