Storage and the Memory Hierarchy

While designing and implementing efficient algorithms is probably the most critical aspect of writing programs that perform well, there's another, often overlooked factor that can have a major impact on performance: memory. Perhaps surprisingly, two algorithms with the same asymptotic performance (number of steps in the worst case) might perform very differently in practice due to the organization of the hardware on which they execute. Such differences typically stem from the way in which an algorithm accesses memory --- particularly where it stores data and what kinds of patterns are exhibited when accessing it. These patterns are referred to as memory locality, and to achieve the best performance, a program's access patterns need to align well with the hardware's memory arrangement.

When programming high-performance applications, you'll need to consider where your data is stored. Is it in registers? In CPU caches? In main memory? In a file on the disk? These devices all feature remarkably different access times, transfer rates, and storage capacities. You will also need to consider how frequently you access each device. For example, accessing a slow disk once as your program starts is typically not a major concern. On the other hand, accessing the disk frequently might slow down your program by a factor of 1000 or more!

This chapter characterizes a diverse set of memory devices and describes how they're typically organized in a modern PC. With that context, we'll see how a collection of varied memory devices can be combined to exploit the locality found in a typical program's memory access patterns.

Storage Devices

One of the most important ways we can classify storage devices is based on how they're accessed. Primary storage devices can be accessed directly by the CPU. That is, the CPU's assembly instructions provide a way to express the exact location of the data that the instructions should retrieve. Examples of primary storage include CPU registers and main memory (RAM), which can be accessed in IA32 assembly as %reg and (%reg), respectively.

In contrast, secondary storage devices cannot be directly referenced by CPU instructions. To access a secondary storage device, the desired data must first be copied from the device into primary storage. The most familiar types of secondary storage devices are disk devices (e.g., hard drives and solid state disks), which store your files. Other examples include floppy disks, magnetic tape cartridges, or even remote file servers.

While you may not have thought about the distinction between primary and secondary storage in these terms before, it's likely that you have encountered their differences in your programs already. For example, when you declare and assign ordinary variables (primary storage), you can immediately use them in arithmetic operations. When working with files (secondary storage), you're required to read values from the file into memory variables before you can access them.

Other important criteria for classifying memory devices arise from their performance and capacity characteristics. The three most interesting metrics are:

Capacity: The amount of data a device can store. Capacity is typically measured in bytes.

Latency: The amount of time it takes for a device to respond with data after it's instructed to perform a data retrieval operation. Latency is typically measured in either fractions of a second (e.g., milliseconds or nanoseconds) or CPU cycles.

Transfer rate: The amount of data that can be moved between the device and main memory over some interval of time. Transfer rate is also known as throughput and is typically measured in bytes per second.

Tables NNN and NNN characterize these important metrics across a range of common primary and secondary storage devices, respectively:

Table NNN: Primary storage device characteristics.

| Device | Capacity | Approx. Latency |

|---|---|---|

| Register | 4 - 8 bytes | <1 ns |

| CPU Cache | 1 - 16 Megabytes | 5 ns |

| Main Memory | 4 - 64 Gigabytes | 100 ns |

Table NNN: Secondary storage device characteristics.

| Device | Capacity | Latency | Transfer Rate |

|---|---|---|---|

| Flash Disk | 0.5 - 2 Terabytes | 0.1 - 1 ms | 200 - 2000 Megabytes / second |

| Traditional Disk | 0.5 - 10 Terabytes | 5 - 10 ms | 100 - 200 Megabytes / second |

| Remote Network Server | Varies considerably | 20 - 200 ms | Varies considerably |

You may be wondering: why is there such a huge disparity in device performance? The answer is a combination of distance and variations in the technologies used to implement the devices. Distance is a factor because ultimately all data needs to be available to the CPU's arithmetic components (e.g., the ALU) for processing. CPU Registers are placed as close to the ALU as possible to minimize the time it takes for a signal to propagate between the two. Thus, while they can only store a few bytes and there aren't many of them, the values stored in registers are available to the ALU almost immediately!

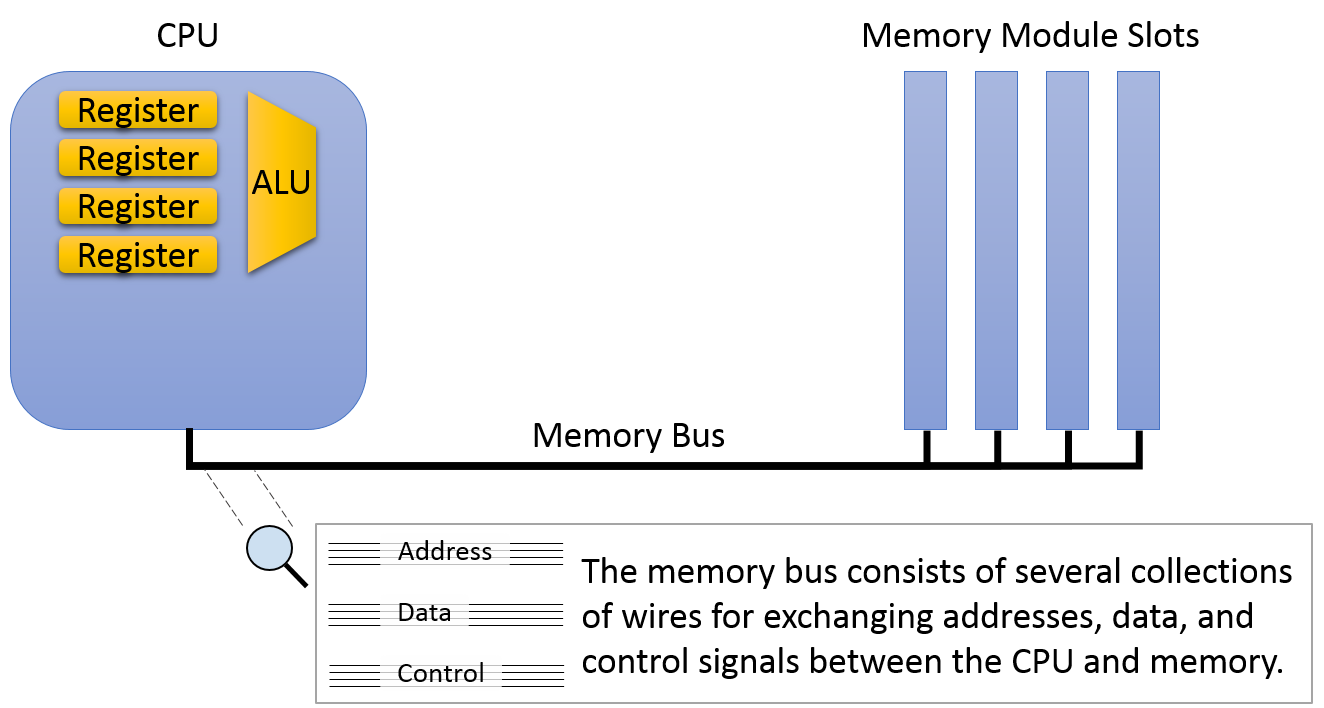

Main memory is located in memory modules that are outside the CPU. The CPU and memory modules are connected by a memory bus, which is depicted in figure NNN. To retrieve a value from memory, the CPU puts the address of the data it would like to retrieve on the memory bus and signals that the memory modules should perform a read. After a short delay (approximately 10 ns), the memory module will send the value stored at the requested address across the bus to the CPU.

Even though the CPU and main memory are physically just a few inches away from one another, the extra distance and circuitry between them drastically increases the access latency and reduces the throughput of memory as compared to registers. Of course, despite having lower performance than registers, main memory is still an essential component because it stores several orders of magnitude more data than can fit on the CPU. As we'll see with the other forms of storage, there is a clear trade-off between capacity and speed.

While you're likely to have directly interacted with registers and memory as you've learned to program in C or assembly language, you may be surprised to learn that there is another form of memory that exists between them. CPU cache (pronounced "cash") occupies that middle ground, both physically and in terms of its performance and capacity characteristics. A CPU cache typically stores 1-16 Megabytes of data and is located on the CPU, but physically it's not quite as close to the ALU as the registers. Thus, caches are faster to access than main memory, but they require a few more cycles than registers to make data available for computation.

Rather than the programmer explicitly loading values into the cache, control circuitry within the CPU will automatically store a subset of main memory's contents in the cache. Much of this chapter will focus on the design decisions that go into cache construction and the algorithms that should govern which data they store. As we'll see soon, our goal will be to strategically control which subset of main memory gets stored in the caches such that as many memory requests as possible can be serviced by the (much higher performance) cache!

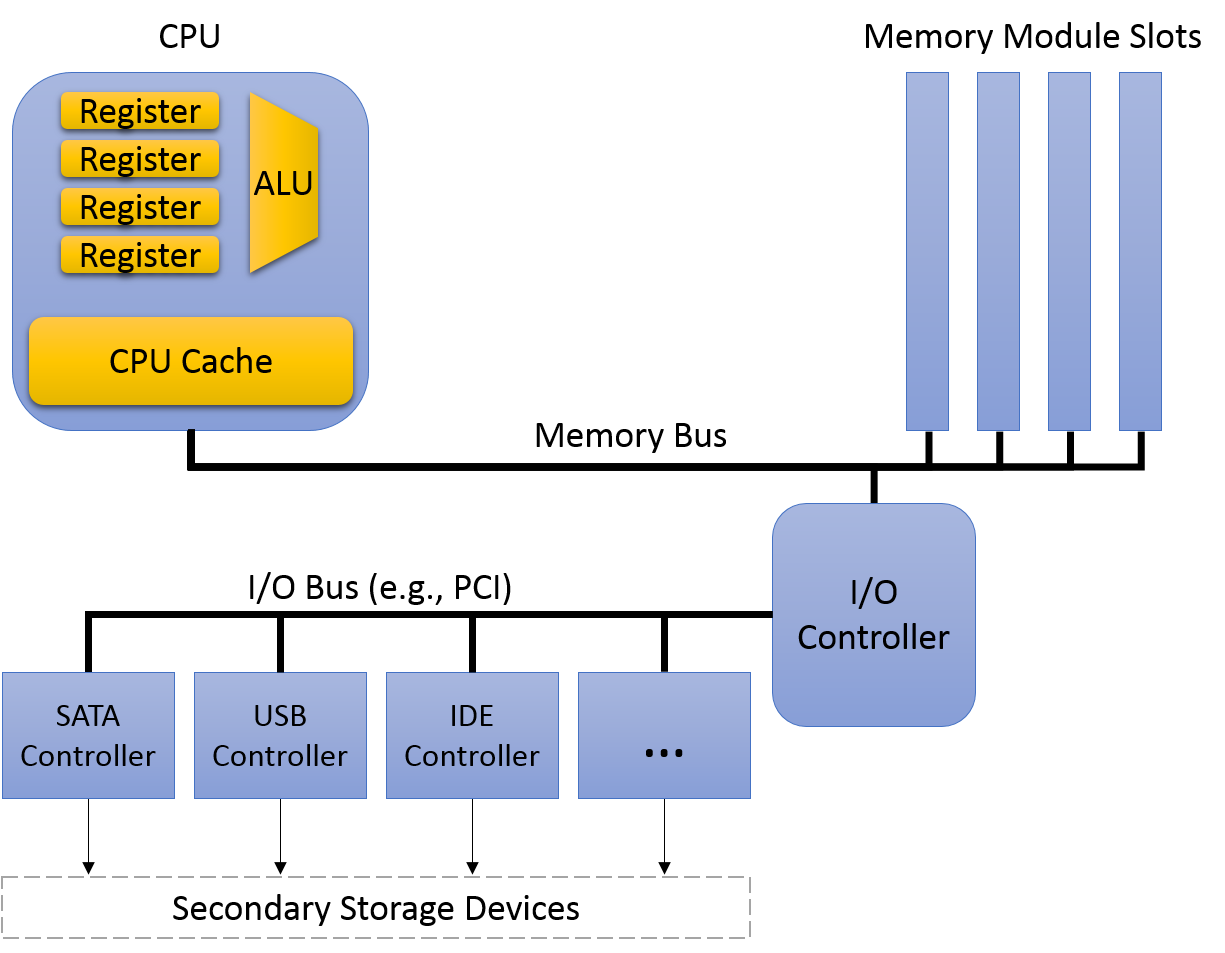

Secondary storage devices are even farther away from the CPU than main memory. Figure NNN displays how the path from secondary storage to main memory generally passes through several intermediate device controllers. For example, a typical hard disk connects to a Serial ATA controller, which connects to the system I/O controller, which in turn connects to the memory bus. All these extra devices make disks easier to use by abstracting the details of disk communication from the OS and programmer. However, the complexity they add when transferring data introduces delays to the transfers.

Finally, the technology underlying a device often has a significant impact on its performance characteristics. Registers and caches are built from relatively simple circuits, consisting of just a few logic gates. Their small size and minimal complexity ensures that electrical signals can propagate through them quickly, reducing their latencies. On the opposite end of the spectrum, traditional hard disks contain spinning magnetic platters that store hundreds of gigabytes. While they're very dense, access latency is relatively slow due to the requirements of mechanically aligning a disk head and rotating the platter into the correct position.