CS68 Problem Set for Hidden Markov Models

The goal of this week's lab is to obtain some practice working with Hidden Markov Models and also analyzing its performance. This lab will not be graded but will important practice for the Final exam. Solution Set is available here.

- Apply the forward algorithm to infer the probability of observing the sequence AGTT. Show each step of the recursion by filling a 2D matrix of forward probabilities f_k(i). Compute the backward probability as well showing your steps and the resulting backward matrix, b_k(i)

- Infer the most likely path through the states of the HMM for the sequence TATA. Again, show the steps of your algorithm. Your answer should indicate the optimal path as well as the probability of that path.

- Box 1: 2 apples, 2 oranges

- Box 2: 3 apples, 1 orange

- Box 3: 1 apple, 3 oranges

- Draw a hidden Markov model to represent this problem. Show a state diagram in addition to two-dimensional parameter arrays a (for transitions) and e (for emission probabilities).

- Compute the probability of seeing box sequence π = (1,1,3,3,2) and fruit sequence x = (A,A,O,O,A). Show your work.

- Compute the optimal set of boxes corresponding to the fruit sequence given in the previous problem (i.e., π*). That is, which box was each piece of fruit most likely to be selected from?

- How much better is your path than that given in Problem 4? Compute this

value by using a log-odds ratio; that is:

P(π*|x) log ---------- P(π_4|x)where the denominator is using the path from problem 4. - In class, we discussed the Cheating Dealer scenario where we have an HMM model of a dealer who alternates between using a fair and biased coin at a casino. We modeled the notion that the dealer choses which coin to use at every point in the sequence. Assume, instead, that the dealer only chooses a coin every 5 flips to make avoid suspicion. That is, if the dealer decides to use a fair coin, he/she will use that coin for the next five flips. Draw the corresponding HMM and describe any differences in either the forward or Viterbi algorithm, if any.

- Consider the choice of adding and END state to a model. Show that the use of end state decreases the probability of a sequence x, P(x), as x gets longer. Hint: start by considering two simple models, one with an end state and one without and pay attention to how the transition probabilites on the edges are affected

- In the applications in class, using an END state makes sense (e.g., a gene can't be infinite in size). Describe an application (does not have to be biological) where using an END state would not be useful

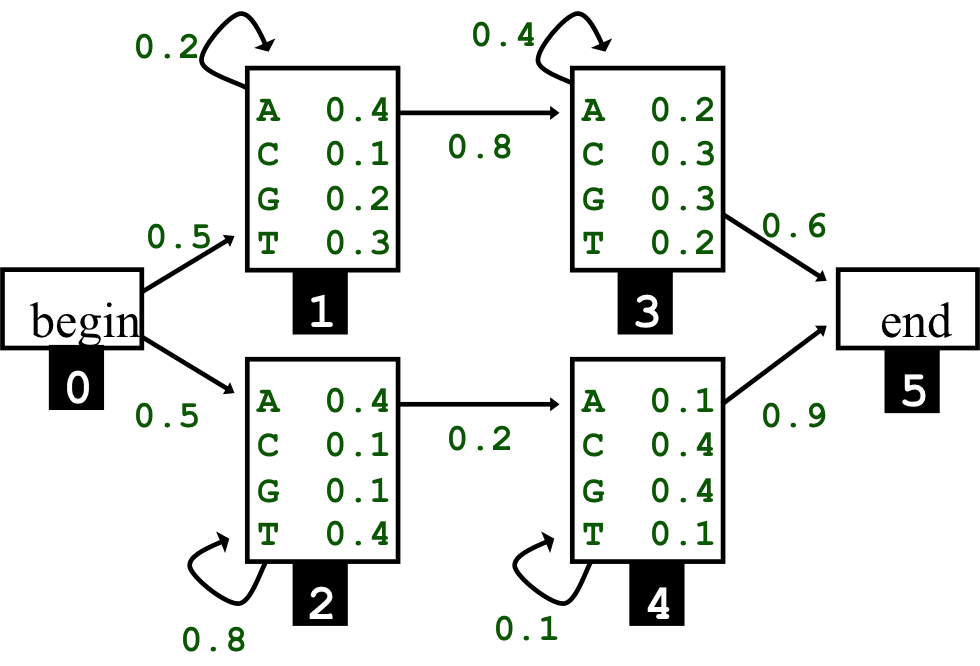

Above is an example HMM that we used in class. Use this HMM for the following questions:

For the following set of questions, consider the following problem. Assume you have three boxes, each containing a certain number of apples and oranges. At any point in time, you select a box at random, and then an fruit from that box (i.e., an apple or orange) and record your finding (A for apple and O for orange). You immediately replace the fruit so that the total number of apples and oranges stays the same over time, and repeat the process. Unfortunately, you forgot to write down the boxes you chose and simply have an account of apples and oranges. Assume the following quantity of fruits: