Use Teammaker to form your team. You can log in to that site to indicate your partner preference. Once you and your partner have specified each other, a GitHub repository will be created for your team.

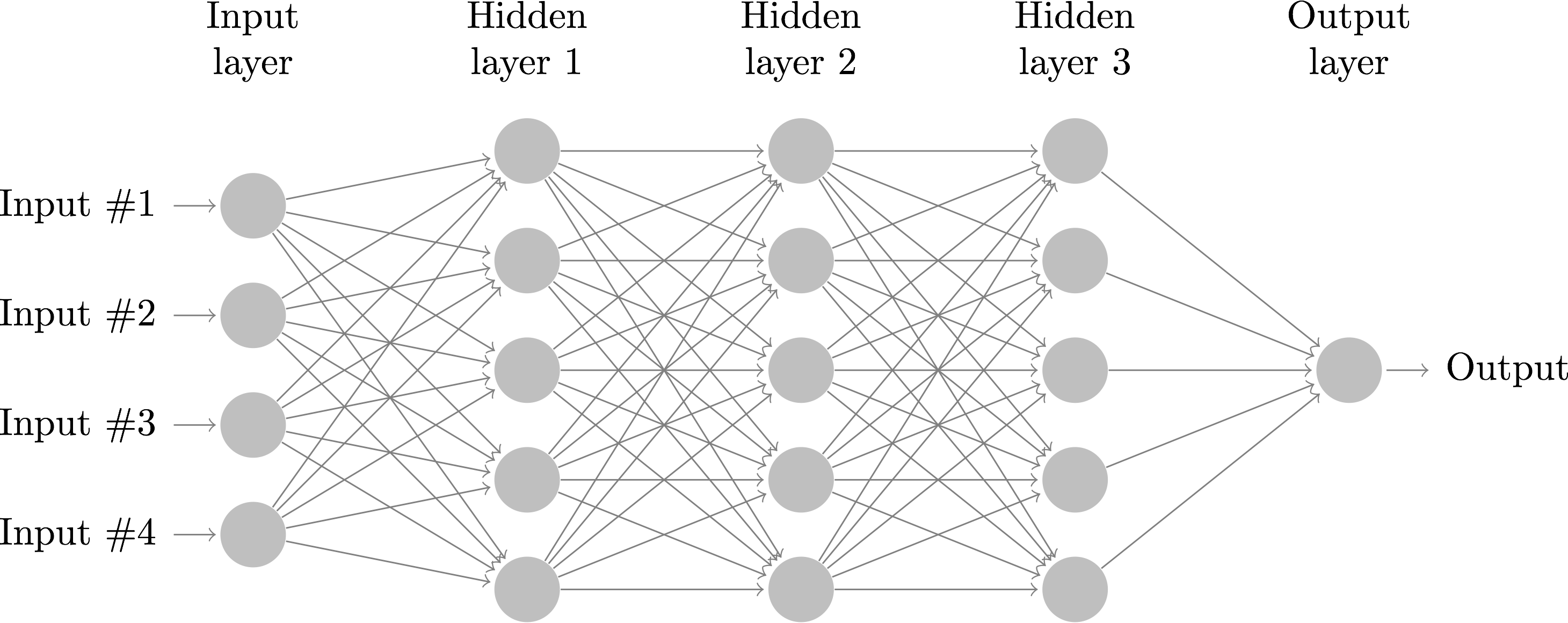

There are many good software packages that you can use to experiment with neural networks, such as TensorFlow/Keras, PyTorch, or conx. However, before using these packages you should first understand how backpropagation works at a detailed level. Therefore, in this lab you will implement your own neural network from scratch.

Your neural network will be made up of a number of classes:

As you are working on your implementation, you should frequently try the provided unit tests:

python3 nn_test.py

There are 16 unit tests in this file. At the very top of the output are 16 characters summarizing your progress: an E indicates a runtime error, an F indicates a failure, and a . indicates a success.
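As a small illustration of reading that summary line, the sketch below tallies the characters in a made-up 16-character summary. The string and the function name are hypothetical and are not real output from nn_test.py.

```python
from collections import Counter

def tally_summary(summary):
    """Count passes, failures, and runtime errors in a test summary line."""
    counts = Counter(summary)
    return {
        "passed": counts["."],
        "failed": counts["F"],
        "errors": counts["E"],
    }

# Hypothetical summary line: 14 passes, 1 failure, 1 runtime error.
example = "....F....E......"
print(tally_summary(example))
```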

Below is a short summary of the tasks you need to complete. Note that much more detail about all of these methods can be found in the comments within the file neural_net.py.

Initially you will focus on implementing the SigmoidNode class methods:

The first three functions store their results in the node's self.activation and self.delta fields. update_weights changes the weights of the edges stored in self.in_edges.
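To make the arithmetic concrete, here is a minimal sketch of the quantities a sigmoid node typically computes, written as standalone functions rather than as methods. The function names, the squared-error loss, and the sign convention (delta carries the error term, and weights move by adding learning rate times source activation times delta) are assumptions for illustration; the comments in neural_net.py define what your methods must actually do.

```python
import math

def sigmoid(x):
    """Logistic activation: squashes any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def output_delta(activation, target):
    """Delta for an output node, assuming squared error.

    The factor activation * (1 - activation) is the sigmoid's derivative.
    """
    return activation * (1.0 - activation) * (target - activation)

def hidden_delta(activation, downstream):
    """Delta for a hidden node.

    downstream is a list of (weight, delta) pairs, one per outgoing edge.
    """
    error = sum(w * d for w, d in downstream)
    return activation * (1.0 - activation) * error

def updated_weight(weight, learning_rate, source_activation, delta):
    """One gradient-descent step for a single incoming edge."""
    return weight + learning_rate * source_activation * delta
```

Under these assumptions, an output node with activation 0.5 and target 1.0 has delta 0.5 * 0.5 * 0.5 = 0.125.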

Next you will work on completing the Network class. It is already set up to initialize a neural network with input, bias, and sigmoid nodes. You must implement the following functions for the neural network:

The main function sets up a network with a single two-neuron hidden layer and trains it to represent the function XOR.
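It may help to see that a network of this shape can represent XOR at all. The sketch below runs a forward pass through a two-input network with two hidden sigmoid units and one sigmoid output, using weights picked by hand (one hidden unit behaves roughly like OR, the other roughly like AND). These weights are an illustrative assumption; your trained network will most likely find different ones, and it does not use the lab's Network class.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def xor_net(x1, x2):
    """Forward pass through a hand-weighted 2-2-1 sigmoid network."""
    # Hidden unit 1: close to 1 when at least one input is on (OR-like).
    h1 = sigmoid(20.0 * x1 + 20.0 * x2 - 10.0)
    # Hidden unit 2: close to 1 only when both inputs are on (AND-like).
    h2 = sigmoid(20.0 * x1 + 20.0 * x2 - 30.0)
    # Output: on when h1 is on and h2 is off, i.e. exactly one input is on.
    return sigmoid(20.0 * h1 - 20.0 * h2 - 10.0)

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, round(xor_net(a, b), 3))
```

The bias terms (-10, -30, -10) play the role of the weights on edges from the bias node.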

Once you can successfully learn XOR, move on to the next section.

In the latex document provided (called explanation.tex), fill in the diagram of the XOR network with the specific weights that were found by your program. Then explain how your network has solved the XOR problem. In particular, you need to clearly articulate:

To compile your latex document into a pdf, run the following command:

pdflatex explanation.tex

Or, if you prefer, a Makefile has also been provided. Once compiled, you can open the PDF from the command line with the command:

evince explanation.pdf

Note that the PDF viewer is a GUI program, so you'll need to be sitting at the machine you're using, or set up X tunnelling. Alternatively, for remote access it may be more convenient to use SSHFS, which will allow you to build the PDF on a lab machine and then open it with any PDF viewer on your local machine.

Another alternative is to upload the .tex file to Overleaf, a cloud-hosted platform for making LaTeX documents. This has the advantage that you can edit your .tex document collaboratively (once uploaded, you can "share" the project with your lab partner) and see the PDF in your web browser (i.e. no need to install any software locally). However, please remember that you'll need to submit your explanation using git, so be sure to download the file, check that it compiles cleanly on the CS lab machines, and put the up-to-date version in your lab repo when you're done with it.

Be sure to include your name(s) in the title of the document.

Note that LaTeX is a very powerful markup language for producing nicely typeset documents; there are a lot of things you can do with it, but don't go crazy for this assignment. You should mostly be able to get by with making edits to the text in the template file, but there's lots of info on LaTeX available if you want to learn more about it. See the LaTeX section of the course Remote Tools page for more details about ways to use LaTeX, along with a brief intro to a few of the things you can do with it.

For more complete documentation and references, see my LaTeX intro page for links to further reading.

As an optional extension to the lab, I've provided an example of how to train a larger network on a more complex problem; it uses a handwritten-digit dataset imported from scikit-learn. Note that this network will likely take several hours to train, and still probably won't get much above 80-90% accuracy. Next week we'll try playing around with some heavily optimized neural network libraries that will give us much faster training times, but it's still important to understand how they work first.

digits_net.py

You're also welcome to try modifying the script to use different sklearn datasets, try different network architectures and parameter settings, etc.
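If you do swap in a different dataset, you will probably need to put its inputs and targets into a range that sigmoid units can handle. The sketch below shows one common approach, assuming data shaped like scikit-learn's load_digits (pixel intensities from 0 to 16 and integer labels 0 through 9). The helper names are hypothetical, and digits_net.py may prepare its data differently.

```python
def scale_pixels(pixels, max_value=16.0):
    """Rescale raw pixel intensities into the range [0, 1]."""
    return [p / max_value for p in pixels]

def one_hot(label, num_classes=10):
    """Encode an integer class label as a target vector of 0s and one 1."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]

# Example: three pixel values and the label 3.
print(scale_pixels([0, 8, 16]))
print(one_hot(3))
```

With one-hot targets, the network gets one output node per class, and the predicted class is the index of the output node with the largest activation.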

You should submit the files neural_net.py and explanation.tex. As usual, use git to add, commit, and push your work.