CS81 Lab 5: Applying Novelty Search

In this lab you will apply your implementation of novelty search (invented by Lehman and Stanley) to a task of your choice and compare its performance to standard NEAT.

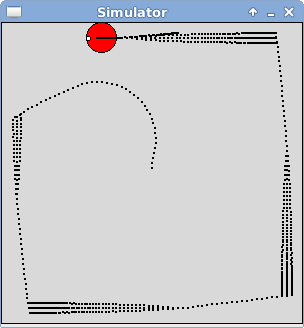

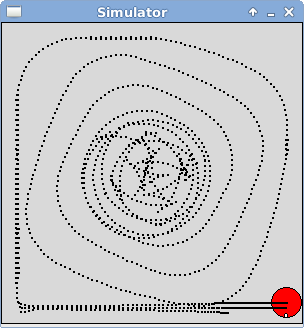

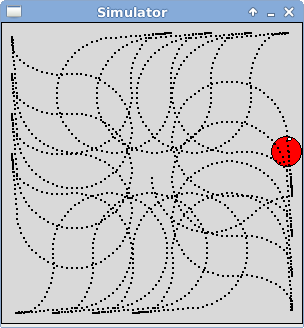

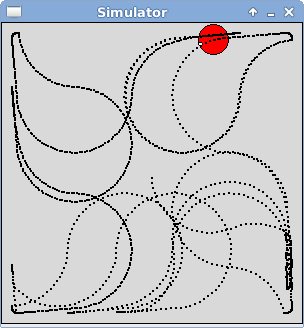

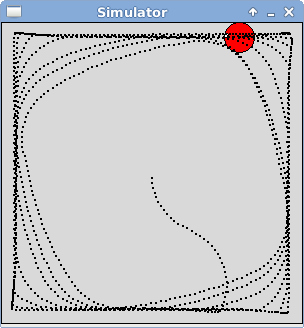

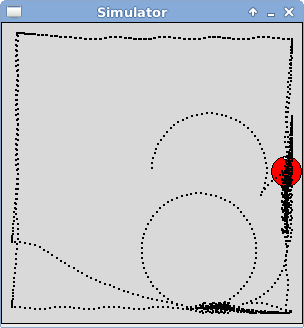

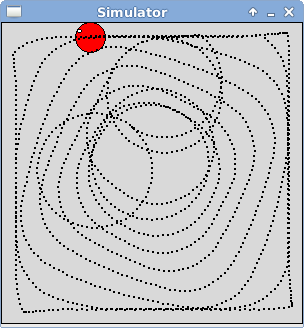

The goal of novelty search is to find unique behaviors, rather than optimizing a particular objective function. The pictures above show some example behaviors that were present in the archive at the end of one novelty search run with a population of 100 that was evolved for 50 generations. Note that these behaviors are not necessarily very good at coverage (with coverage scores of 27.5%, 78.2%, 86.7%, 66.7%, 52.9%, and 43.5%), however they are quite different from one another. For example, the first behavior repeatedly jams itself against a wall in short stutter step motions, while the second behavior traces out a small five-pointed star in the center of the world before spiraling outward.

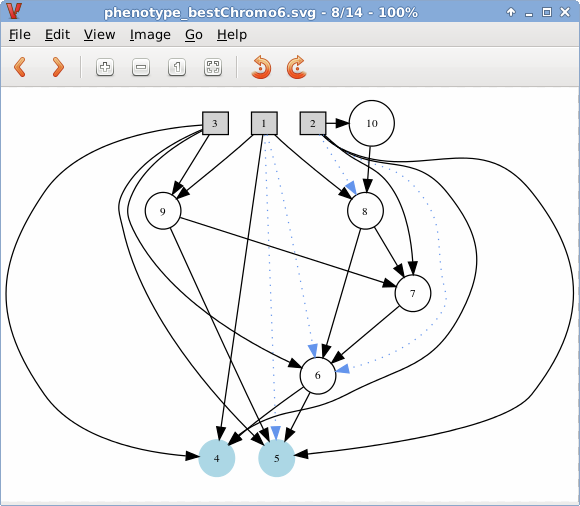

Despite the fact that novelty search is not optimizing an objective function, it is still able to find successful networks that perform coverage quite well (as demonstrated in the picture shown below left where coverage is 97.8%). The corresponding network topology is shown below right and contains:

- 3 inputs

Representing whether the agent is stalled, a normalized distance to the nearest wall in front, and a normalized count down timer (which is set initially at 1500 and is decremented on every action by the agent). - 5 hiddens

- 2 outputs

Representing translation and rotation amounts.

To complete this lab you will make a few minor changes to both

novelty search and your simulator. Then you will create programs

to evolve with novelty search and evaluate the neural networks

discovered. Finally you will run some experiments and write up your

results.

Go through the following steps to setup your directory for this lab.

- First you need to run

setup81to create a git repository for the lab.If you want to work alone do:

setup81 labs/05 none

If you want to work with a partner, then one of you needs to run the following while the other one waits until it finishes.setup81 labs/05 partnerUsername

Once the script finishes, the other partner should run it on their account. - For the next step only one partner should copy over the

starting point code. First you will copy over your novelty search

from last week. Next copy over your simulator from two weeks

ago. Finally, copy over files from my public directory.

cd ~/cs81/labs/05 cp ../04/noveltySearch.py . cp ../03/simulator.py . cp -r ~meeden/public/cs81/labs/05/* ./

- Whether you are working alone or with a partner, you should now add

all of the files to your git repo, commit, and push them as shown below.

git add * git commit -m "lab4 start" git push

- If you are working with a partner, your partner can now pull the

changes in.

cd ~/cs81/labs/05 git pull

Recall that the sparseness of a behavior is defined as the average distance from its k-nearest neighbors in the archive. In your novelty search code, you used maxint as the sparseness of the very first behavior added to the archive.

When combining novelty search with NEAT, we will be using the sparseness of a behavior as its fitness, and the fact that we used maxint will be problematic. In addition, every problem we apply novelty search to will have a different representation of behaviors, and therefore a different range of possible sparseness values. It will be helpful to normalize sparseness to a fixed range of [0, 1].

Update your noveltySearch.py program as follows:

- In the constructor, add another parameter called maxDistance and create a class variable to store this value. This variable represents that maximum distance possible between any two points in behavior space.

- In the sparseness method, when the archive is empty return 1.0 as the sparseness. Then calculate the average distance to the k-nearest neighbors and return this average divided by the new self.maxDistance variable. Dividing by the maximum distance will ensure that sparseness values are always in the range [0,1].

Modify your unit testing for novelty search so that it takes this update into account. Re-run your tests and verify that the code still works correctly.

Use git to add, commit, and push your changes.

Recall that in Lab 3 you used a fitness function that was based on coverage, which you calculated by imposing a grid on the world and recording the grid locations visited by the robot.

In this lab you will instead use a fitness function based on behavioral novelty, which we will define as: The new grid locations visited in sequential order. We will only record a grid location the first time it is visited. We will focus on grids that are 15x15. Therefore a behavioral description will be 225 points long. It is unlikely that any evolved network will visit every single grid location, thus we will need to pad the end of each behavior to ensure that they are all the same length.

Update the Agent class of your simulator.py program as follows:

- In the constructor create a self.behavior variable (initialized to the empty list) to store the new grid locations visited by the agent.

- Modify the updateGrid method to append new grid locations to this list when appropriate.

- Create a getBehavior method that uses the agent's self.gridSize variable to determine the proper length of the behavior and then returns the self.behavior list padded with enough (0, 0) points to achieve this.

Test that your simulator returns correct behaviors for various brains and grid sizes.

Use git to add, commit, and push your changes.

In Lab 3 you used several files to interface with NEAT. The configuration file, called vacuum_config, contained all of the key parameter settings for a NEAT run. Another, called evolveVacuum.py, was used to execute one run of evolution for the vacuum task. A third, called evaluateVacuum.py, was used to test the best neural networks discovered (and saved) by the evolutionary process.

In this lab you will use three similar files called novelty_config, noveltyEvolve.py, and noveltyEvaluate.py. I have provided starting point files for each of these.

- Open the file novelty_config.

Notice the changes that have been made to the parameters (see the comments at the top of the file) to make it work with novelty search. Remember that if you decide to change the size of the input layer, it is currently set to 3, you need to update it here.

- Edit the file noveltyEvolve.py. Assume that

throughout this lab you will be using a grid size of 15.

- Based on the grid size assumption, create a global variable called novSearch that instantiates a NoveltySearch object that uses a k of 15, an archive size of 100, a threshold of 0.25, a point length of 2, a behavior length of 225 (which is 15*15), and an appropriate maximum distance, which you'll need to figure out. For a grid size of 15, the maximum distance between one component pair of behavior points would be the distance between (0,0) and (14,14).

- Note that two additional global variables have been created called bestChromos and bestScore. Because novelty search is ignoring the objective function, which for this problem is coverage, you will use these variables to track the search progress at finding good vacuuming behaviors. The bestScore variable will store the best coverage score found so far. The bestChromos list will append the chromosome that produced the most recent best score found. After novelty search ends this will allow you to save these chromosomes and observe their behaviors.

- Read through the main function and be sure you understand all of the steps. At the end of this function, the best coverage chromosomes are saved with filenames having the prefix bestChromo, and the ten most recently added novel chromosomes are saved with filenames having the prefix novelty. When running your experiments this will allow you to analyze the results.

- Read through the noveltyFitness function and again make sure that you understand what is going on. Notice that in the call to checkArchive, you pass in the current chromo as the otherInfo.

- Open the file noveltyEvaluate.py.

You will use this program to evaluate the chromosomes saved by the evolution program. Read through and understand the code.

Once you updated the file noveltyEvolve.py you are ready to try a short evolutionary run (the number of generations is currently set to 5).

$ python noveltyEvolve.py

After it completes look at the output produced. Use the noveltyEvaluate.py program to observe some of the best and most novel behaviors found.

$ python noveltyEvaluate.py bestChromo0 $ python noveltyEvaluate.py novelty0

If everything appears to be working correctly you can move on to running longer experiments. Use git to add, commit, and push your changes.

Run a series of experiments comparing the original NEAT to Novelty+NEAT on a task of your choice. You may use the vacuuming task or you may come up with a new idea. If you decide to use the vacuuming task, feel free to play around with different inputs.

Run at least 10 experiments using each set up. Record at what generation the best performer on the objective fitness measure was found. Also record the complexity of the best performers found (in terms of number of hidden nodes and number of connections). Write a short paper comparing and contrasting the results. Describe any differences in the types of behaviors found, and include screen shots showing interesting results.

We would expect Novelty+NEAT to outperform NEAT for deceptive tasks, and otherwise we would expect NEAT to outperform Novelty+NEAT. Do your results match these expectations?

When describing experimental work it is crucial that you give details about all of the parameter settings used. Often times this might appear in a table or an appendix so that it doesn't interrupt the flow of the paper.

Send me a pdf of your paper by noon next Friday.

When you are completely done, be sure that you have pushed all of your changes. Use the git status command to verify this. If there are uncommitted changes, be sure to do another add, commit, and push cycle.