setup63 to create a git repository for the lab. If you want to work alone do:

    setup63-Lisa labs/05 none

If you want to work with a partner, then one of you needs to run the following while the other one waits until it finishes.

    setup63-Lisa labs/05 partnerUsername

Once the script finishes, the other partner should run it on their account.

    cd ~/cs63/labs/05
    cp -r ~meeden/public/cs63/labs/05/* ./

This will copy over the starting point files for this lab.

    git add *
    git commit -m "lab5 start"
    git push

    cd ~/cs63/labs/05
    git pull

In this lab you will use neural networks on a number of classification tasks. First you will explore using neural networks to solve some of the simpler problems we discussed in class such as the logic problems AND, OR, and XOR. Next you will look at an auto-encoder problem where the network develops a binary-like representation in its hidden layer. Then you will experiment with a handwritten digit recognizer. Finally you will focus on classifying images of faces.

You will be writing up your answers to this lab as a LaTeX document. The repo contains a starting point document for you in the file lab5.tex. In order to convert the LaTeX document into a pdf and view it do:

    pdflatex lab5.tex
    evince lab5.pdf

You should track the LaTeX document in git. However, you should NOT track the resulting pdf file that you create. You will also be creating some short programs to set up and run your own classification task.

| File that will be evaluated: | |
| --- | --- |
| lab5.tex | Your answers to all of the lab questions go here |

| Files you'll use: | |
| --- | --- |
| and-net.py | Creates a two-layer network to solve AND |
| or-net.py | Creates a two-layer network to solve OR |
| xor-net.py | Creates a two-layer network that is unable to solve XOR |
| xor-3layer.py | Creates a three-layer network to solve XOR |
| inputs.dat | Contains the four input patterns used for all of the logic problems |
| and-targets.dat | Contains the four target patterns for AND |
| or-targets.dat | Contains the four target patterns for OR |
| xor-targets.dat | Contains the four target patterns for XOR |
| 8bit-net.py | Creates a three-layer auto-encoder network |
| 8bit.dat | Contains the eight input and target patterns for the auto-encoder problem |
| digit-net.py | Creates a three-layer network to classify handwritten digits |
| digit-inputs.dat | Contains the input patterns for the digits classifier |
| digit-targets.dat | Contains the target patterns for the digits classifier |
| glasses-net.py | Creates a three-layer network to classify images of people's faces |
| sunglassesfiles | File names of the images to be used to create the glasses training set (you will create this) |
| glasses-inputs.dat | Contains the input patterns for the glasses classifier (you will create this) |
| glasses-targets.dat | Contains the target patterns for the glasses classifier (you will create this) |
| processingFaces.py | A collection of functions to convert the raw image data into a form usable by a neural network |

| Supporting files you can read, but should not modify: | |
| --- | --- |
| conx.py | Implements neural networks |
| newConx.py | Enhances the original conx code by providing visualization |

In this section you will explore how neural networks perform on the basic logic problems AND, OR, and XOR. For these problems there are just four different input patterns (the x's), as shown in the table below:

| x1 | x2 | AND(x1, x2) | OR(x1, x2) | XOR(x1, x2) |
| --- | --- | --- | --- | --- |
| 0 | 0 | 0 | 0 | 0 |
| 1 | 0 | 0 | 1 | 1 |
| 0 | 1 | 0 | 1 | 1 |
| 1 | 1 | 1 | 1 | 0 |

    python -i and-net.py

Note that using the -i option when invoking python will execute the given filename and then leave you in the interpreter. The network has just two inputs and one output, along with a bias. Before training, test the AND network's performance and look at its weights. Then train the network, re-test its performance, and check how the weights have changed. Do the weights make sense to you? Draw a picture of the network if that helps you visualize the solution. Exit from the interpreter.

    python -i or-net.py

It has the same structure as the previous network. Try all of the same commands as before. Make sure that the learned weights make sense to you. Exit from the interpreter.

    python -i xor-net.py

Again it has the same structure as the previous two examples; however, when you train this network it will be unable to learn. You can press CTRL-C to interrupt the training. Exit from the interpreter.

    python -i xor-3layer.py

In this case the network has three layers (input, hidden, and output) instead of just two (input, output). To see all of the weights for this network requires two commands:

lab5.tex to show all of the trained weights and biases. Then explain in the writeup how the network has solved the problem based on them.
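The failure of the two-layer XOR network can be checked by brute force. The sketch below, written in plain Python 3 and independent of conx, treats a single output unit as a threshold on its net input (a positive net input corresponds to a sigmoid output above 0.5) and searches a grid of candidate weights. The grid range and step are arbitrary assumptions chosen for illustration, and the function names are hypothetical.

```python
from itertools import product

# The four input patterns and the targets from the truth table.
INPUTS = [(0, 0), (1, 0), (0, 1), (1, 1)]
TARGETS = {
    "AND": [0, 0, 0, 1],
    "OR": [0, 1, 1, 1],
    "XOR": [0, 1, 1, 0],
}


def unit_fires(x, weights, bias):
    """Return 1 if bias + sum(x_i * w_i) is positive, else 0."""
    net_input = bias + sum(xi * wi for xi, wi in zip(x, weights))
    return 1 if net_input > 0 else 0


def find_single_unit_solution(targets, grid):
    """Search (w1, w2, bias) values on the grid for one unit matching targets."""
    for w1, w2, bias in product(grid, repeat=3):
        outputs = [unit_fires(x, (w1, w2), bias) for x in INPUTS]
        if outputs == targets:
            return (w1, w2, bias)
    return None


# Assumed search grid: -10.0 to 10.0 in steps of 0.5.
GRID = [i / 2 for i in range(-20, 21)]

for name, targets in TARGETS.items():
    print(name, find_single_unit_solution(targets, GRID))
```

The search finds weights for AND and OR but returns None for XOR. No finer grid would help: XOR requires bias <= 0, w1 + bias > 0, w2 + bias > 0, and w1 + w2 + bias <= 0, and these four inequalities cannot hold together.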

Remember that each unit in the network computes the following (where the x's represent the incoming activations and the w's represent the incoming weights):

$\text{netInput} = \text{bias} + \sum_i x_i w_i$

$\text{output} = f(\text{netInput})$

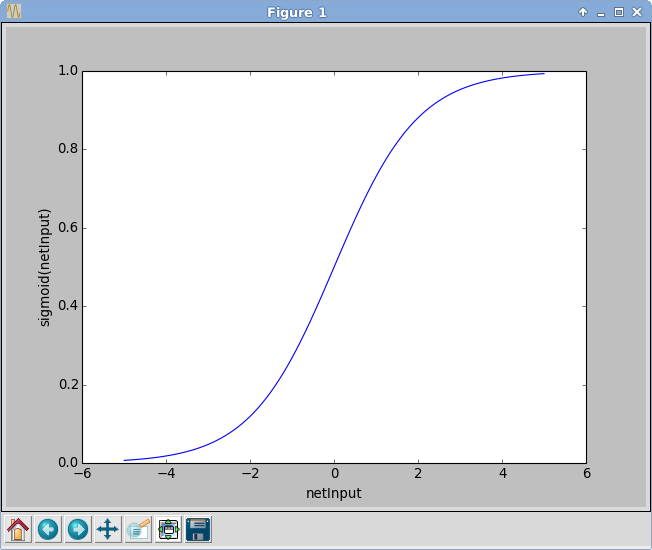

The default activation function f in this neural network library is the sigmoid, $f(\text{netInput}) = 1/(1+e^{-\text{netInput}})$, which is plotted in the figure above. So for example if the netInput for a particular unit is 0, it would output the value 0.5. The more negative the netInput, the closer the output gets to 0. The more positive the netInput, the closer the output gets to 1.
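The computation of a single unit can be sketched in a few lines of plain Python 3. This is an illustration of the formulas only, not conx's implementation; the function names and the example weights are made up for the demonstration.

```python
import math


def sigmoid(net_input):
    """The sigmoid activation function: 1 / (1 + e^(-netInput))."""
    return 1.0 / (1.0 + math.exp(-net_input))


def unit_output(activations, weights, bias):
    """Compute netInput = bias + sum of x_i * w_i, then apply the sigmoid."""
    net_input = bias + sum(x * w for x, w in zip(activations, weights))
    return sigmoid(net_input)


# Illustrative weights only (not values learned by any lab network).
print(unit_output([0, 0], [1.0, 1.0], 0.0))   # netInput is 0, so output is 0.5
print(unit_output([1, 1], [5.0, 5.0], -7.5))  # netInput is 2.5, output above 0.9
print(unit_output([1, 0], [5.0, 5.0], -7.5))  # netInput is -2.5, output below 0.1
```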

Look at section 4.6.4 starting at the bottom of page 106 in Tom Mitchell's Chapter 4 about hidden layer representations. We will be using bit patterns where a single bit is set to 1 and the rest are set to 0. We will focus on bit patterns of length 8 as discussed in the reading, so there are only 8 possible patterns.

Create a network that learns to take eight-bit patterns and reproduce them on the output layer after re-coding them in a three-unit hidden layer:

    python -i 8bit-net.py

After training, use the showPerformance() method to inspect the hidden layer representations. In the file lab5.tex write down each of the hidden layer representations created by the network in the table provided. Then convert them into bit patterns by rounding each hidden unit activation to either 0 or 1. Discuss whether the network re-coded them using a binary-like representation.
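The rounding step can be sketched as follows in plain Python 3. The activation values here are placeholders invented for illustration, not output from the lab's network, and the helper names are hypothetical. A threshold of 0.5 is assumed for rounding.

```python
def to_bit_pattern(activations, threshold=0.5):
    """Round each hidden activation to 0 or 1 using the given threshold."""
    return tuple(1 if a >= threshold else 0 for a in activations)


def all_codes_distinct(hidden_activations):
    """Check whether every input pattern received a different rounded code."""
    codes = [to_bit_pattern(h) for h in hidden_activations]
    return len(set(codes)) == len(codes)


# Placeholder activations for two patterns (made up, NOT real network output).
example_hidden = [
    (0.02, 0.97, 0.91),
    (0.95, 0.03, 0.88),
]

for h in example_hidden:
    print(h, "->", to_bit_pattern(h))
print(all_codes_distinct(example_hidden))
```

Three hidden units allow exactly 2^3 = 8 rounded codes, so a fully binary-like encoding of the eight input patterns would use each code exactly once.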

Create a network that learns to classify handwritten digits:

python -i digit-net.py

When you run this file it will open up additional windows so that you can visualize the input, hidden, and output activations as well as the hidden weights. Move the windows around so that you can see all of them. Each individual activation or weight is depicted as a grayscale box. The lighter the color the higher the value, the darker the color the lower the value.

This network takes 8x8 images as input, passes them through a 5-unit hidden layer, and classifies them in a 10-unit output layer. Each unit in the output layer is associated with one digit (0-9). For example, if the last unit is highly active, this means that the network is classifying the current image as the digit 9. In contrast, if the first unit is highly active, this indicates the network is classifying it as the digit 0.

This data set contains 100 examples including images of:

This is the first data set in the lab where we've had enough data to create both a training set and a testing set. Notice that the splitData method has been used to randomly select 60% of the data set for training. You should train for a limited number of epochs, say 25, swap the data and evaluate the network's performance on the testing set. Continue swapping and training as long as the performance on the testing set is improving.
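The split-then-alternate procedure described above can be sketched in plain Python 3. This is not conx's splitData method; split_data and train_until_test_stalls are hypothetical helpers, the patience parameter is an added assumption, and the score sequence in the usage example is made up so the loop can run without a network.

```python
import random


def split_data(patterns, train_fraction=0.6, seed=None):
    """Randomly split patterns into (training, testing) lists."""
    rng = random.Random(seed)
    shuffled = list(patterns)
    rng.shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


def train_until_test_stalls(train_some, test_score, patience=2, max_rounds=50):
    """Alternate training and testing until the test score stops improving.

    train_some() trains for a fixed number of epochs (say 25).
    test_score() returns the fraction correct on the testing set.
    Stops after `patience` consecutive rounds without improvement.
    """
    best = test_score()
    stalled = 0
    rounds = 0
    while rounds < max_rounds and stalled < patience:
        train_some()
        rounds += 1
        score = test_score()
        if score > best:
            best = score
            stalled = 0
        else:
            stalled += 1
    return best, rounds


train, test = split_data(range(100), train_fraction=0.6, seed=0)
print(len(train), len(test))

# Made-up test scores standing in for a real network's evaluations.
fake_scores = iter([0.0, 0.0, 0.3, 0.6, 0.6, 0.55])
print(train_until_test_stalls(lambda: None, lambda: next(fake_scores)))
```

A patience greater than 1 is used because early rounds can show no improvement on the testing set even though the training error is still dropping.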

Pick one digit to focus on and analyze it in more depth. Choose one that has a reasonable number of examples in the training set. Find every instance of that pattern in the training set and record which hidden units are active (0-4) in the table provided in the file lab5.tex. Look at the hidden layer weights in the displays. In lab5.tex describe how the network learned to recognize this digit based on the hidden layer features it has discovered. Include images of the hidden weights, if you'd like.

We will be using the same images of faces that were discussed in this week's reading. Look at section 4.7 from Tom Mitchell's Chapter 4 (pages 112-116).

The directory /home/meeden/public/cs63/faces_4/ contains 624 images stored in PGM format. Do NOT copy these images to your own directory. You can view one of these images using the xv command. Each file is named according to the following convention:

userid_pose_expression_eyes_scale.pgm
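Because the file name encodes the properties of each image, the fields can be recovered by splitting on underscores. The sketch below is plain Python 3; the helper name and the example file name are hypothetical, and it assumes that no field contains an underscore.

```python
from collections import namedtuple

FaceInfo = namedtuple("FaceInfo", "userid pose expression eyes scale")


def parse_face_filename(filename):
    """Split userid_pose_expression_eyes_scale.pgm into its five fields."""
    name = filename.rsplit("/", 1)[-1]  # drop any directory part
    if not name.endswith(".pgm"):
        raise ValueError("expected a .pgm file name: %r" % filename)
    fields = name[: -len(".pgm")].split("_")
    if len(fields) != 5:
        raise ValueError("expected five underscore-separated fields: %r" % filename)
    return FaceInfo(*fields)


# Hypothetical file name that follows the naming convention.
info = parse_face_filename("someuser_straight_neutral_sunglasses_4.pgm")
print(info.pose, info.expression, info.eyes)
```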

Using a neural network to learn a classification task involves the following steps: determining a task, gathering appropriate data, creating the training set of inputs and targets, creating a network with the appropriate topology and parameter settings, training the network, and finally analyzing the results. Each of these steps is explained in more detail below. After you have tried these steps on the sunglasses example, you will repeat this process on a task of your choosing.

    cd /home/meeden/public/cs63/faces_4
    ls -C1 *straight*neutral* > ~/cs63/labs/05/sunglassesfiles

Using the unix command wc ~/cs63/labs/05/sunglassesfiles we can see that this file has 40 lines in it. We may want a slightly larger training set. So let's add in all the images where a person is looking straight ahead and has a happy expression. We can do this by again using ls to select these images and using two greater than signs to append these additional names to the end of the same file:

    ls -C1 *straight*happy* >> ~/cs63/labs/05/sunglassesfiles

Using the wc command again we see that we now have 79 images. Once your sunglasses file is correct, use git to add, commit, and push it.
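The same selection logic can be expressed in plain Python 3 with shell-style wildcard matching, which may be handy when choosing images for your own task. This sketch works on a list of names rather than the real directory; the file names below are invented for illustration and select_names is a hypothetical helper.

```python
from fnmatch import fnmatchcase


def select_names(names, patterns):
    """Collect the names matching each wildcard pattern, one pattern at a time.

    Mirrors writing the first ls result to a file and appending the second.
    """
    selected = []
    for pattern in patterns:
        selected.extend(sorted(n for n in names if fnmatchcase(n, pattern)))
    return selected


# Invented file names that follow the naming convention.
names = [
    "userA_straight_neutral_open_4.pgm",
    "userA_straight_happy_sunglasses_4.pgm",
    "userA_left_neutral_open_4.pgm",
    "userB_straight_neutral_sunglasses_4.pgm",
]

chosen = select_names(names, ["*straight*neutral*", "*straight*happy*"])
print(len(chosen))  # plays the role of the wc line count
for name in chosen:
    print(name)
```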

Execute this file by doing:

    python processingFaces.py

It will create two files called glasses-inputs.dat and glasses-targets.dat. Again use the wc command to verify that both files contain 79 lines. Once they are correct, use git to add, commit, and push them.
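To give a feel for what converting a raw image into network inputs can involve, here is a minimal Python 3 sketch that reads the bytes of a binary (P5) PGM image and scales each pixel to the range 0 to 1. It is an assumption about the general approach, not a description of what processingFaces.py actually does, and the function names are hypothetical.

```python
def _read_header_tokens(data, count):
    """Read `count` whitespace-separated header tokens, skipping # comments."""
    tokens, pos = [], 0
    while len(tokens) < count:
        # Skip whitespace and comment lines before the next token.
        while pos < len(data) and (data[pos:pos + 1].isspace() or data[pos:pos + 1] == b"#"):
            if data[pos:pos + 1] == b"#":
                while pos < len(data) and data[pos:pos + 1] not in (b"\n", b"\r"):
                    pos += 1
            else:
                pos += 1
        start = pos
        while pos < len(data) and not data[pos:pos + 1].isspace():
            pos += 1
        tokens.append(data[start:pos])
    # Exactly one whitespace byte separates the header from the pixel data.
    return tokens, pos + 1


def pgm_to_inputs(data):
    """Convert binary PGM bytes into a flat list of values between 0 and 1."""
    (magic, width, height, maxval), start = _read_header_tokens(data, 4)
    if magic != b"P5":
        raise ValueError("this sketch only handles binary (P5) PGM data")
    width, height, maxval = int(width), int(height), int(maxval)
    if maxval > 255:
        raise ValueError("this sketch only handles one byte per pixel")
    pixels = data[start:start + width * height]
    if len(pixels) != width * height:
        raise ValueError("pixel data is shorter than the header promises")
    return [p / maxval for p in pixels]


# A tiny made-up 2x2 image, built in memory for illustration.
tiny = b"P5\n# comment\n2 2\n255\n" + bytes([0, 255, 51, 102])
print(pgm_to_inputs(tiny))
```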

Execute this file by doing:

    python -i glasses-net.py

This will produce a number of windows. Move them around so that you can see them all.

    >>> n.train(5)
    Epoch # 1 | TSS Error: 15.3588 | Correct: 0.0000
    Epoch # 2 | TSS Error: 15.2270 | Correct: 0.0000
    Epoch # 3 | TSS Error: 15.2524 | Correct: 0.0000
    Epoch # 4 | TSS Error: 15.1156 | Correct: 0.0000
    Epoch # 5 | TSS Error: 14.8360 | Correct: 0.0000
    Reset limit reached; ending without reaching goal
    ----------------------------------------------------
    Final # 5 | TSS Error: 14.8360 | Correct: 0.0000
    ----------------------------------------------------

You can see that error is dropping, but so far the network has not learned to respond correctly to any of the training patterns. NOTE: because each network is initialized with different random weights your training results will vary. Let's check on how well the network is doing on the test patterns.

    >>> n.swapData()
    Swapping training and testing sets...
    19 training patterns, 60 test patterns
    >>> n.evaluate()
    network classified image #0 (sunglasses) as ???
    network classified image #1 (sunglasses) as ???
    network classified image #2 (eyes) as ???
    network classified image #3 (sunglasses) as ???
    network classified image #4 (eyes) as ???
    network classified image #5 (eyes) as ???
    network classified image #6 (eyes) as ???
    network classified image #7 (eyes) as ???
    network classified image #8 (eyes) as ???
    network classified image #9 (eyes) as ???
    network classified image #10 (sunglasses) as ???
    network classified image #11 (sunglasses) as ???
    network classified image #12 (eyes) as ???
    network classified image #13 (eyes) as ???
    network classified image #14 (eyes) as ???
    network classified image #15 (eyes) as ???
    network classified image #16 (eyes) as ???
    network classified image #17 (sunglasses) as ???
    network classified image #18 (eyes) as ???
    19 patterns: 0 correct (0.0%), 19 wrong (100.0%)

As expected, it is not responding correctly to these either. Let's swap the data back, continue training, and then re-test.

    >>> n.swapData()
    Swapping training and testing sets...
    60 training patterns, 19 test patterns
    >>> n.train(5)
    Epoch # 1 | TSS Error: 14.2245 | Correct: 0.0000
    Epoch # 2 | TSS Error: 12.9923 | Correct: 0.0000
    Epoch # 3 | TSS Error: 10.3263 | Correct: 0.0000
    Epoch # 4 | TSS Error: 7.8044 | Correct: 0.0833
    Epoch # 5 | TSS Error: 5.8430 | Correct: 0.3500
    Reset limit reached; ending without reaching goal
    ----------------------------------------------------
    Final # 5 | TSS Error: 5.8430 | Correct: 0.3500
    ----------------------------------------------------
    >>> n.swapData()
    Swapping training and testing sets...
    19 training patterns, 60 test patterns
    >>> n.evaluate()
    network classified image #2 (eyes) as ???
    network classified image #4 (eyes) as ???
    network classified image #5 (eyes) as ???
    network classified image #6 (eyes) as ???
    network classified image #7 (eyes) as ???
    network classified image #8 (eyes) as ???
    network classified image #9 (eyes) as ???
    network classified image #10 (sunglasses) as ???
    network classified image #12 (eyes) as ???
    network classified image #13 (eyes) as ???
    network classified image #14 (eyes) as ???
    network classified image #15 (eyes) as ???
    network classified image #16 (eyes) as ???
    network classified image #18 (eyes) as ???
    19 patterns: 5 correct (26.3%), 14 wrong (73.7%)

Clearly the performance of the network is improving. After several more iterations of this process of swapping the data and additional training, the network will start performing well on both the training set and the testing set and learning can be stopped. Remember the goal is to achieve good performance on the training set while still being able to respond appropriately to the novel data in the testing set.
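The two numbers reported for each epoch can be understood with a small plain Python 3 sketch. It assumes that TSS stands for the total sum of squared errors over all patterns and output units, and that an output counts as correct when every unit is within some tolerance of its target. The tolerance value, the function names, and the example outputs are assumptions for illustration; conx's exact rules may differ.

```python
def tss_error(targets, outputs):
    """Total sum of squared errors over all patterns and output units."""
    return sum(
        (t - o) ** 2
        for target, output in zip(targets, outputs)
        for t, o in zip(target, output)
    )


def fraction_correct(targets, outputs, tolerance=0.4):
    """Fraction of patterns whose every output is within tolerance of its target."""
    correct = sum(
        1
        for target, output in zip(targets, outputs)
        if all(abs(t - o) <= tolerance for t, o in zip(target, output))
    )
    return correct / len(targets)


# Made-up targets and network outputs for three one-output patterns.
targets = [[1], [0], [1]]
outputs = [[0.7], [0.2], [0.4]]

print(tss_error(targets, outputs))
print(fraction_correct(targets, outputs))
```

Under these assumptions the error can fall steadily while the fraction correct stays at zero, as in the first five epochs of the transcript: outputs move toward their targets before any of them crosses into the tolerance band.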

After you have successfully completed the sunglasses example, repeat this process (Steps 1-6 above) on a task of your choice using some subset of the faces image data. In the file lab5.tex explain in detail what you did in each step.

Creating a successful classifier often requires an iterative process. You may find that you need more hidden units. You may find that you need more training examples. Some problems you'd like to try (such as recognizing the emotions in the images) may be too difficult to solve with these down-sized images.

Once you have successfully created a new classifier, take screen shots of your resulting hidden weights and save them in files called hidden1.png, hidden2.png, and so on.

One method of getting screen shots is to right click the mouse on the camera icon at the bottom of your screen. Select properties and then select "Active Window" under "Region to capture". You will only need to do this step once. From now on, it will always grab the active window. Then go to one of the conx weight windows and left click the mouse to make it the active window. Finally left click on the camera to get the screen shot. Include these hidden files as figures in your lab writeup.