cd ~/cs63/labs git clone git@github.swarthmore.edu:...

The objectives of this lab are to:

You will implement the following local search methods:

You will need to modify the following python files:

A traveling salesperson tour visits each of a collection of cities once before returning to the starting point. The objective is to minimize the total distance traveled. We will represent a tour by listing cities in the order visited. Neighbors of a tour are produced by swaping the positions of any two cities in the ordering.

The class TSP in the file TSP.py represents a problem instance and provides several useful methods. TSP.random_tour() returns a random state from which we can begin a local search. TSP.cost(tour) evaluates the total distance traveled to complete a tour. TSP.get_neighbor(tour) calls functions you will write to return a new tour in which two cities have swapped positions. TSP.py also has a Tour class for representing specific tours. The function Tour.new_tour() takes a pair of cities to swap and generates the corresponding neighbor tour.

A TSP instance is constructed by passing in the name of a JSON file specifying the latitude and longitude of locations that must be visited. Example JSON files are located in the coordinates/ folder. The __init__() method also takes a string specifying how to select a neighboring state: passing "best" or "random" will determine what gets called by the TSP.get_neighbor() method.

The TSP class leaves three methods for you to implement:

Note that all of these method names begin with a single underscore. This is a python convention for indicating a 'private' method. Python doesn't actually distinguish between private and public class members, but the underscore lets programmers know that the method isn't intended to be called directly. In this case, your local search functions should try not to call these methods. Instead, they should call TSP.get_neighbor(), which will point to either _best_neighbor() or _random_neighbor() based on how __init__ was called. One exception is when hill climbing requires a random move. In this case, you may call TSP._random_neighbor() directly.

You are strongly encouraged to write a main function for TSP.py in order to test your implementation of the neighbor functins.

tour = tsp.random_tour() cost = tsp.cost(tour) return tour, costThis will allow you to run HillClimbing.py without generating errors:

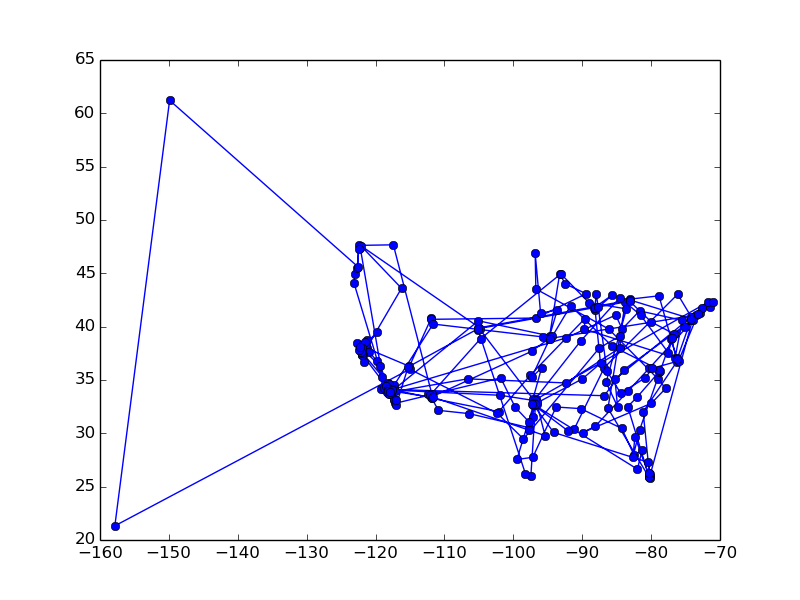

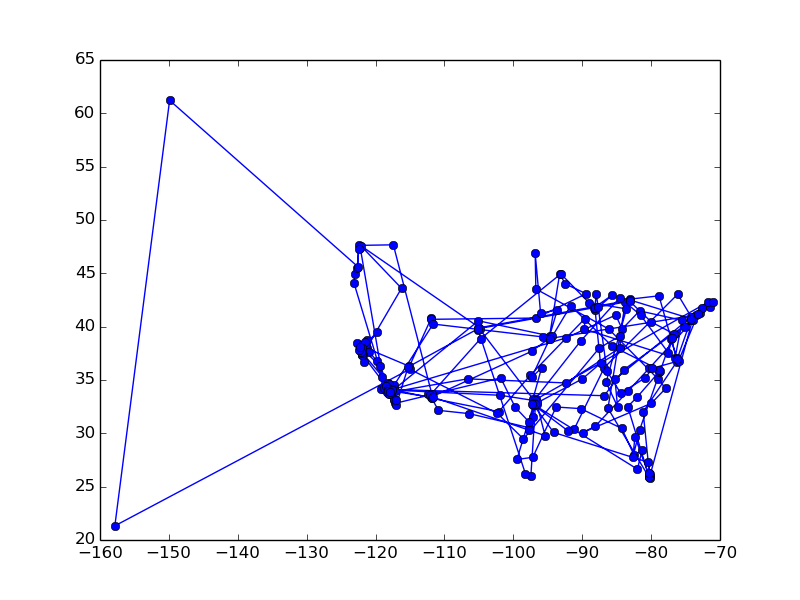

./HillClimbing.py coordinates/United_States_25.jsonDoing so will print the random tour and its cost, and will also create a file that plots the tour in latitude/longitude coordinates. By default, the plot is saved in the file map.pdf, but you can change this with the -plot command line argument:

./HillClimbing.py coordinates/India_15.json -plot random_tour.pdfYour job is to implement the following hill climbing algorithm:

state = random tour

best = state

while evals < MAX_EVALS:

if random restart:

state = random tour

else if random move:

state = random neighbor of state

else:

neighbor = generate (best or random) neighbor of state

if cost(neighbor) < cost(state):

state = neighbor

if cost(state) < cost(best):

best = state

end while

return best, cost(best)

Note that because the TSP class is counting the number of times cost() gets called, you should save the value rather than making another function call whenever possible.

Several of the parameters used above are specified in a config file.

By default, HillClimbing.py reads HC_defaults.josn.

You should copy this file and modify it, passing in the new file via the -config command line argument:

cp HC_defaults.json HC_config.json *edit HC_config.json* ./HillClimbing.py coordinates/South_Africa_10.json -config HC_config.json

The config file for simulated annealing includes a slightly different set of parameters. In particular, config["INIT_TEMP"] specifies the starting temperature, and config["TEMP_DECAY"] gives the geometric rate at which the temperature decays. The RUNS and STEPS parameters specify how many random starting points simulated annealing should be run from, and how long each run should last.

Stochastic beam search considers all neighbors of all individual candidates in the population and preserves each with probability proportional to its temperature. It is recommended that you add a helper function for beam search that evaluates the cost of each neighbor and determines its temperature score, then randomly selects the neighbors that will appear in the next iteration. Remember to avoid excess calls to cost by saving the value whenever possible. For the larger maps, the number of neighbors grows quite large, so it may be impractical to evaluate the cost of every neighbor. Your helper function should therefore consider only a random subset of each individual's neighbors. The size of this subset is given by the MAX_NEIGHBORS parameter in the beam search config file.

A very useful python function for stochastic beam search is numpy.random.choice. This function takes a list of items to choose from, a number of samples to draw (with replacement), and another list representating a discrete probability distribution over the items. It returns a list of items that were selected. Here is an example:

>>>import numpy.random

>>>import collections

>>>items = ["A", "B", "C", "D"]

>>>probs = [0.1, 0.2, 0.3, 0.4]

>>>for i in range(5):

>>> choices = numpy.random.choice(items, 10, p=probs)

>>> counts = collections.Counter(choices)

>>> print choices

>>> print counts, "\n"

['C' 'D' 'D' 'B' 'D' 'C' 'D' 'C' 'B' 'D']

Counter({'D': 5, 'C': 3, 'B': 2})

['D' 'C' 'C' 'D' 'D' 'B' 'D' 'B' 'B' 'B']

Counter({'D': 4, 'B': 4, 'C': 2})

['D' 'D' 'C' 'B' 'D' 'B' 'B' 'C' 'C' 'B']

Counter({'B': 4, 'C': 3, 'D': 3})

['C' 'D' 'B' 'B' 'B' 'B' 'C' 'C' 'C' 'B']

Counter({'B': 5, 'C': 4, 'D': 1})

['C' 'B' 'D' 'C' 'C' 'A' 'D' 'C' 'C' 'C']

Counter({'C': 6, 'D': 2, 'A': 1, 'B': 1})

The documentation for numpy.random.choice has more information about how this works.

You may also use numpy.random.multinomial if you prefer.

You should not focus your exploration on the number of random restarts. Increasing this parameter will generally produce slightly better results, but the marginal impact of finding good values for other parameters, like the temperature, should be much higher (especially as a function of computation time).

You are encouraged to try automating this exploration process. Parameter tuning for new algorithms is in fact one of the most common uses of local search! However, if you plan to run long jobs on lab machines please be courteous to other users. Monitor your memory usage with the program htop, and make sure that python isn't hogging all of a lab machine's memory unless you are sitting at that machine. When running long jobs on a shared machine, it also helps to use the nice command to give other users prioity, but nice does not absolve you from the need to monitor your memory footprint.

git add *.py best_params.txt git commit git push