CS21 Week 11: Sorting

Week 11 Topics

-

Binary Search Implementation

-

Search Performance

-

Sorting

Monday

Search Timing Comparison

We’ve implemented both linear search and binary search in a small

program that measures their performance (in terms of wall-clock time).

We’ll share these files with you (timeSearch.py and

timeBinarySearch.py), and we’ll run them to see how long searching

takes as we vary the size of the input list.

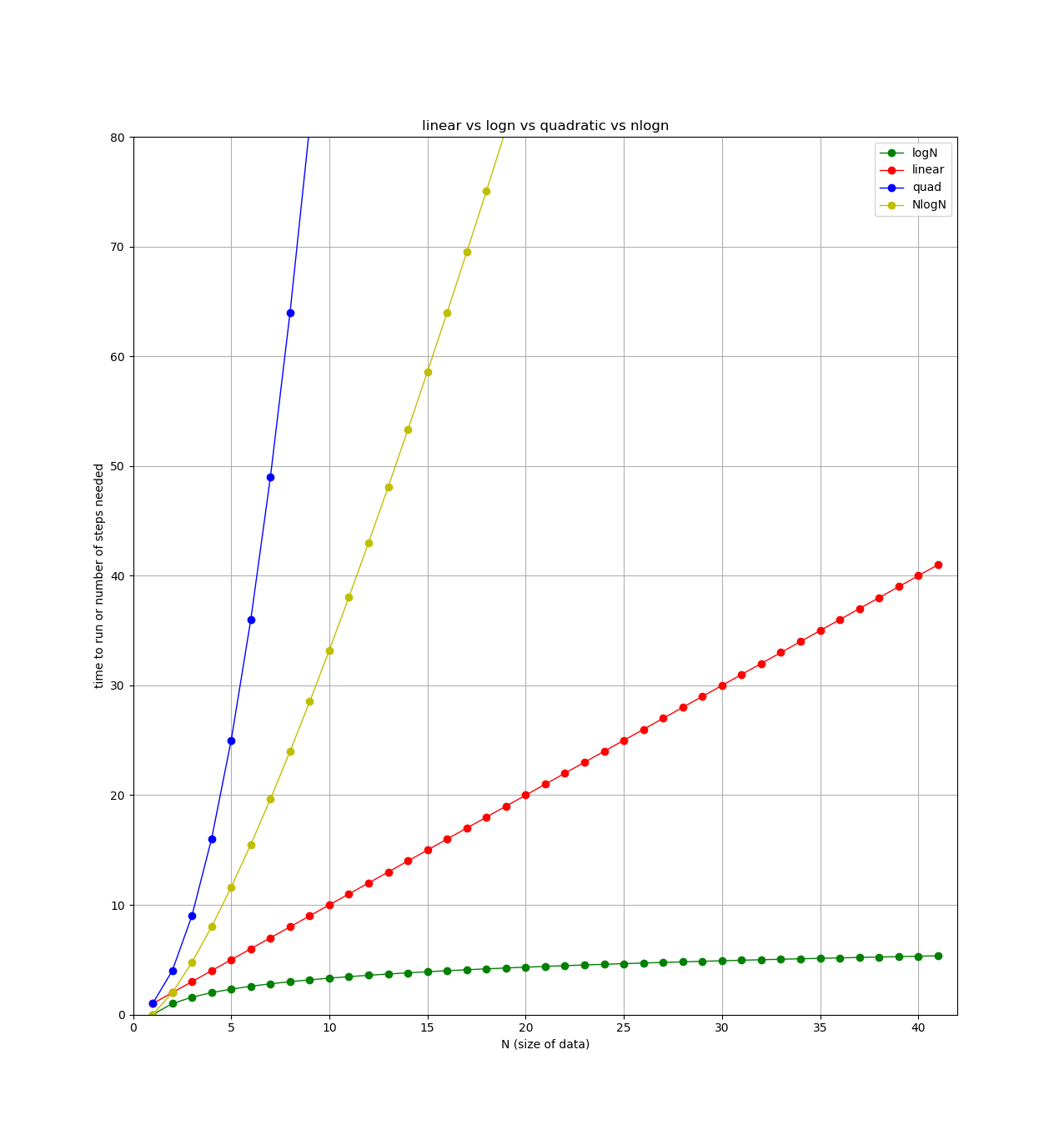

Plotting expected timing

We can also calculate how long we would expect algorithms of different efficiencies to take on different size problems:

Sorting

Binary search only works on a sorted list. Last week, we used python’s built-in sort() method for lists to perform the sorting task. This works fine, but does not provide any insight into how a sorting algorithm works. This week, we’ll explore techniques for implementing our own sorting algorithm using the CS21 skills we already know.

Suppose you have an unsorted pile of exams, and you need to sort them alphabetically by name before you can enter them in a grading spreadsheet. For example, let’s say your exams are in this order:

| Index | Name |

|---|---|

0 |

Tia |

1 |

Andy |

2 |

Ben |

3 |

Rich |

4 |

Vasanta |

5 |

Ameet |

6 |

Kevin |

7 |

Lila |

8 |

Joshua |

9 |

Zach |

10 |

Xiaodong |

Imagine these names are in a list, where "Tia" is at index 0, "Andy" is at

index 1, etc.

-

Can you come up with an algorithm to sort them? Note: your algorithm can’t "just look at the name" to determine where an exam goes, it must systematically compare exams until every exam is in the right place.

-

One small routine we can use is to look at any two names in the list and compare them. If they are out of order, we can swap their locations in the list. By repeatedly comparing multiple items in the list and swapping out of order items, we can gradually make the list sorted.

-

What is the worst-case number of comparisons your algorithm would take?

Swap

When humans look at this problem, we may be able to look at multiple items at once and move large groups of items that are already in sorted order to the proper location. This concept is hard to express algorithmically in a computer language, so instead we focus on a simpler operation that swaps only two elements at a time.

The swap(lst, i, j) function will swap two items at positions i

and j in a list lst. Once we have this small helper function

working, we can use it to implement some sorting routines by comparing

two elements at a time using Boolean relational operators, and calling

swap() if the elements are out of order. For example:

#assume i < j and we want to sort items in increasing order

if lst[i] > lst[j]: #if items are out of order

swap(ls, i, j)In general, we will need to perform several swaps to sort an arbitrary list.

Wednesday/Friday

Selection Sort

The key idea of selection sort is that it repeatedly selects the minimum item remaining in the unsorted portion of the list and then swaps it into its final sorted location.

Consider how selection sort would operate on following list:

[5, 3, 8, 0, 4, 1, 7] 0 1 2 3 4 5 6

When it begins, the entire list is unsorted. Therefore, the goal is to

get the minimum item in the list into position 0. To do this it keeps

track of the indexOfMin. It always starts this variable at the first

index of the unsorted portion of the list, and updates it whenever it

finds a smaller item. In this way, we can do a linear search to find

the index of the smallest item in the unsorted portion of the list.

The indexOfMin starts at 0. Since 3 < 5, indexOfMin is updated to

location 1. Since 8 is not < 3, indexOfMin is unchanged. Since 0 < 3,

indexOfMin is updated to location 3. All other locations in the list

will be checked to see if their contents are < 0. No further updates of indexOfMin are needed as 0 in position 3 is the smallest element/

After the entire unsorted portion of the list has been checked, one

swap is done between locations indexOfMin and the location at the

beginning of the unsorted portion. The current ordering in the list

will become:

[0, 3, 8, 5, 4, 1, 7] 0 1 2 3 4 5 6

Let’s redraw this to make it clear where the division between the sorted and unsorted portions of the list occurs.

[0, ||| 3, 8, 5, 4, 1, 7] 0 1 2 3 4 5 6 sorted unsorted

Next, selection sort will repeat the process described above to find the index of the minimum element in the usorted portion of the list, now starting at index 1. It will

find that the indexOfMin is now 5, and it will swap the items at

locations 1 and 5. Giving us this new list ordering:

[0, 1, ||| 8, 5, 4, 3, 7] 0 1 2 3 4 5 6 sorted unsorted

Notice that the sorted portion of the list is growing by one more item each iteration through selection sort.

What would the list look like after the next iteration?

[0, 1, 3, ||| 5, 4, 8, 7] 0 1 2 3 4 5 6 sorted unsorted

Selection sort can be implemented as a loop within a loop, where the inner loop is used to find indexOfMin, and each iteration of the outer loop identifies the smallest element in the unsorted portion of the list and swaps it into place.

How many steps does it take to run selection sort?

We need to first find the indexOfMin in a list of \(N\) elements. Finding

the indexOfMin is a linear search, so it takes \(N\) steps. Then we need

to find indexOfMin in the unsorted portion of the list — a list of

\((N-1)\) elements. This takes \((N-1)\) steps. Finding the next minimum

element takes \((N-2)\) steps, and so on.

The toal number of steps that selection sort takes is \(N + (N-1) + (N-2) + ... + 2 + 1\). The total sum is \(\frac{N^2+N}{2}\). Note that the highest order term is quadratic (\(\frac{N^2}{2}\)). We therefore say that selection sort is a quadratic time sort algorithm. Intuitively, when we double the size of the list we are sorting, selection sort will take \(2^2=4\) times as long to run. How does selection sort compare to linear search or binary search?

Bubble Sort

Another approach to sort a list is to scan over the list and just

compare adjacent elements — elements at index i and i+1. If

they are out of order, we will swap. But one scan over this list may

not be enough. So we can perform multiple scans until the list is

sorted. A list will be sorted when we perform zero swaps while

scanning the list.

keepGoing = True

while keepGoing == True:

#Optimistically assume we are done

keepGoing = False

for each adjacent pair of items:

if out of order:

swap

keepGoing = True #another scan is neededLike selection sort, bubble sort is implemented as a loop within a loop, where the amount of work can be described as the summation \(N + (N-1) + (N-2) + ... + 2 + 1\). The has the same quadratic runtime, so unfortunately, bubble sort does not improve on the worst case running time of selection sort. Note that in situations where the list is nearly sorted, bubble sort may not find many items out of order and only a few passes of the outer loop may be needed. In some cases, this may result in a faster sort.

Summary

Bubble sort and selection sort are both examples of quadratic sort algorithms. In the case of searching it we found that binary search was a considerable improvement over linear search, provided the list was already sorted. Sorting is computationally more time consuming than searching but we can sort once and perform many searches on a the sorted list.

A natural question to ask is if there are any sorting algorithms that are better than quadratic. The short answer is yes, and in fact, python’s built in sort() performs much better than either bubble sort or selection sort. We need a few more skills to explore these improved algorithms.